Applying AI to Solve Big Memory Problems

Artificial Intelligence (AI) is transforming industries at every level, but the spotlight often shines on AI that lives at the application layer—think recommendation engines, chatbots, and autonomous systems. These innovations are exciting, but they represent only part of AI’s potential. At MEXT, we’re flipping the script by applying AI below the application layer, directly into the heart of infrastructure. This is where modern AI concepts meet classic performance bottlenecks, unlocking new levels of efficiency for data centers.

More Memory, More Problems

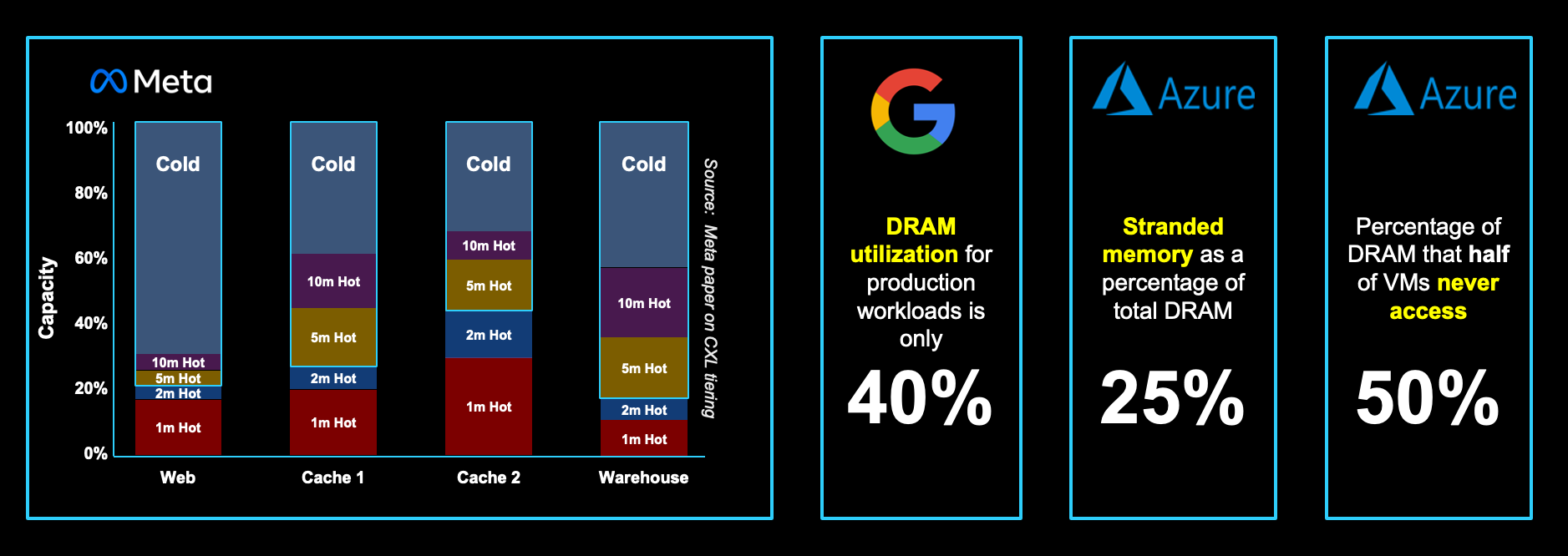

Today’s applications demand increasingly large amounts of memory to operate efficiently. At the same time, the cost of server memory (DRAM) continues to rise. Given that expense, one would expect all provisioned memory to be both necessary and well utilized. In many business environments, this is far from reality. Research from leading cloud providers indicates that memory utilization for both internal and external workloads often falls to 50% or lower [1]. In other words, a significant portion of allocated DRAM, sometimes more than half, sits idle for extended periods, which is an inefficient use of one of the most expensive components in a server. This underutilized “cold memory” means that organizations are provisioning far more DRAM than they actually need, resulting in wasted resources and potentially billions of dollars in unnecessary expenditure.
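To make the idea of cold memory concrete, here is a minimal sketch of how one might estimate the cold fraction of a workload from per-page last-access times. The `PageStat` record, the 4 KiB page size, and the idle threshold are all assumptions made for illustration; they are not taken from MEXT's software or from the cited research.

```python
from dataclasses import dataclass

PAGE_SIZE_BYTES = 4096  # assumed page size for this sketch


@dataclass
class PageStat:
    """Hypothetical per-page record: an id and the time it was last touched."""
    page_id: int
    last_access_s: float  # time of the most recent access, in seconds


def count_cold(pages, now_s, idle_threshold_s):
    """Count pages that have been idle for at least idle_threshold_s seconds."""
    return sum(1 for p in pages if now_s - p.last_access_s >= idle_threshold_s)


def cold_fraction(pages, now_s, idle_threshold_s):
    """Fraction of pages classified as cold (0.0 for an empty page list)."""
    if not pages:
        return 0.0
    return count_cold(pages, now_s, idle_threshold_s) / len(pages)


def cold_bytes(pages, now_s, idle_threshold_s):
    """Bytes of DRAM currently held by cold pages."""
    return count_cold(pages, now_s, idle_threshold_s) * PAGE_SIZE_BYTES
```

A page counts as cold here purely because of how long it has been idle. Real systems typically sample access information rather than tracking every page exactly, so this should be read as a simplified model.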

Rethinking Where AI Is Deployed

At MEXT, we set out to solve this memory utilization issue and radically lower the cost of computing. We achieve this by using low-cost Flash as an extension of a system’s memory, reducing the amount of DRAM that must be provisioned. In other words, we make Flash transparently look and perform as if it were part of main memory. Sounds impossible? With advanced AI techniques, it’s not!

The first step was rethinking where AI could be deployed. Instead of having it sit above the application layer (as is the case for almost all “AI use cases” today), we thought: what if we implement it within the memory subsystem itself, below the application layer? This is how the MEXT AI engine was born.

MEXT AI

MEXT software begins by offloading cold memory pages from DRAM into Flash. The AI engine continuously analyzes the memory page access patterns associated with the running workload, learning which memory pages are likely to be needed in the near future. From there, it proactively pushes these memory pages from Flash back into DRAM, before they are needed. From the application’s perspective, the needed memory pages never left DRAM—keeping performance intact, but all within a far smaller DRAM footprint. This translates to compelling efficiencies—up to 40% lower costs.

This AI-driven memory management operates transparently, ensuring seamless application performance while enhancing infrastructure-level efficiency.
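The offload, predict, and prefetch loop described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the article does not describe MEXT's actual model, so a simple first-order "most frequent successor" predictor stands in for the AI engine, and an LRU-ordered set stands in for DRAM. The `TieredMemorySim` class and the `run` helper are hypothetical names introduced for this example.

```python
from collections import Counter, OrderedDict, defaultdict


class TieredMemorySim:
    """Toy two-tier memory: a small DRAM set backed by a larger Flash tier."""

    def __init__(self, dram_capacity, prefetch=True):
        self.dram = OrderedDict()               # page ids ordered by recency
        self.dram_capacity = dram_capacity
        self.prefetch = prefetch
        self.successors = defaultdict(Counter)  # page -> counts of next pages
        self.prev_page = None
        self.hits = 0
        self.misses = 0

    def _load(self, page):
        """Put a page in DRAM, evicting the least recently used page if full."""
        if page in self.dram:
            self.dram.move_to_end(page)
            return
        if len(self.dram) >= self.dram_capacity:
            self.dram.popitem(last=False)       # cold page is offloaded to Flash
        self.dram[page] = None

    def access(self, page):
        """Serve one access, learn from it, then prefetch the predicted page."""
        if page in self.dram:
            self.hits += 1
        else:
            self.misses += 1                    # page had to come from Flash
        self._load(page)

        # Learn: record that `page` followed the previously accessed page.
        if self.prev_page is not None:
            self.successors[self.prev_page][page] += 1
        self.prev_page = page

        # Predict: pull the most frequent successor into DRAM ahead of time.
        if self.prefetch and self.successors[page]:
            predicted, _count = self.successors[page].most_common(1)[0]
            self._load(predicted)


def run(trace, dram_capacity, prefetch):
    """Replay a list of page ids and return (hits, misses)."""
    sim = TieredMemorySim(dram_capacity, prefetch=prefetch)
    for page in trace:
        sim.access(page)
    return sim.hits, sim.misses


if __name__ == "__main__":
    trace = list(range(6)) * 50  # cyclic pattern larger than DRAM
    print("LRU only:     ", run(trace, dram_capacity=3, prefetch=False))
    print("with prefetch:", run(trace, dram_capacity=3, prefetch=True))
```

On a cyclic trace that does not fit in DRAM, plain LRU misses on every access, while the predictor removes most of those misses once it has seen the pattern. A production system would need a far more capable model and would have to account for Flash latency and bandwidth, which this sketch ignores.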

Why AI Below the Application Layer Matters

AI solutions above the application layer undoubtedly drive value, but their effectiveness is often constrained by the underlying infrastructure. A poorly optimized memory architecture can bottleneck even the most advanced AI application. By embedding AI at the infrastructure level, MEXT is solving these systemic issues at the root.

Looking Ahead

As data demands grow, the need for smarter infrastructure is more pressing than ever. MEXT represents a shift in how we think about AI’s role in technology stacks, proving that the biggest gains often come from the most foundational improvements.

AI isn’t just for chatbots and autonomous cars. It’s for memory management, storage optimization, and compute efficiency. It’s for solving the problems that data centers have been wrestling with for decades. With MEXT, the future of AI isn’t just above the application layer—it’s beneath it.

Sources: 1. https://dl.acm.org/doi/pdf/10.1145/3578338.3593553
