Memory Challenges vs. the Industry

In our last blog post, we dove into the top 3 challenges associated with server memory (DRAM): the memory cliff, rising costs, and poor utilization. In the recent past, the industry has made numerous attempts to address these challenges. Some approaches have worked in some capacity, while others have made promises they couldn’t keep.

Swap

Swap was introduced to mitigate memory exhaustion by offloading infrequently used data from RAM to disk (originally hard drives, now SSDs), effectively extending available memory. In theory, this prevents applications from crashing under out-of-memory conditions and smooths out utilization spikes. In practice, swap has struggled to solve the fundamental challenges of the memory cliff and poor utilization. The core issue is the stark performance gap between RAM and SSDs: swapping incurs significant latency (500x that of DRAM). When a system begins to swap heavily, it often starts thrashing, where the overhead of moving pages to and from disk outweighs any benefit and causes severe slowdowns rather than graceful degradation. On top of this, swap does nothing intelligent to anticipate demand: most swapped-out pages eventually have to be read back into memory, and each such read adds latency that further degrades application performance. In fact, performance under swap can be so poor that many applications prevent swapping altogether.
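To make the latency gap concrete, here is a rough back-of-the-envelope sketch of average access latency as a function of how many accesses hit swap. It takes the 500x figure above at face value and measures everything in units of one DRAM access. The function name `effective_latency` and the sample fractions are illustrative assumptions, not measurements.

```python
def effective_latency(swap_fraction: float, swap_penalty: float = 500.0) -> float:
    """Average access latency, in units of one DRAM access.

    swap_fraction: share of memory accesses served from swap (0.0 to 1.0).
    swap_penalty:  latency of a swapped access relative to DRAM
                   (defaults to the 500x figure quoted in the text).
    """
    if not 0.0 <= swap_fraction <= 1.0:
        raise ValueError("swap_fraction must be between 0 and 1")
    # Weighted average of the fast path (DRAM) and the slow path (swap).
    return (1.0 - swap_fraction) * 1.0 + swap_fraction * swap_penalty


# Illustrative fractions only; real workloads vary widely.
for fraction in (0.0, 0.001, 0.01, 0.05):
    slowdown = effective_latency(fraction)
    print(f"{fraction:.1%} of accesses swapped -> {slowdown:.2f}x DRAM latency")
```

Under these assumptions, sending just 1% of accesses to swap makes the average access about six times slower than pure DRAM, which is why heavy swapping feels like a cliff rather than a slope.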

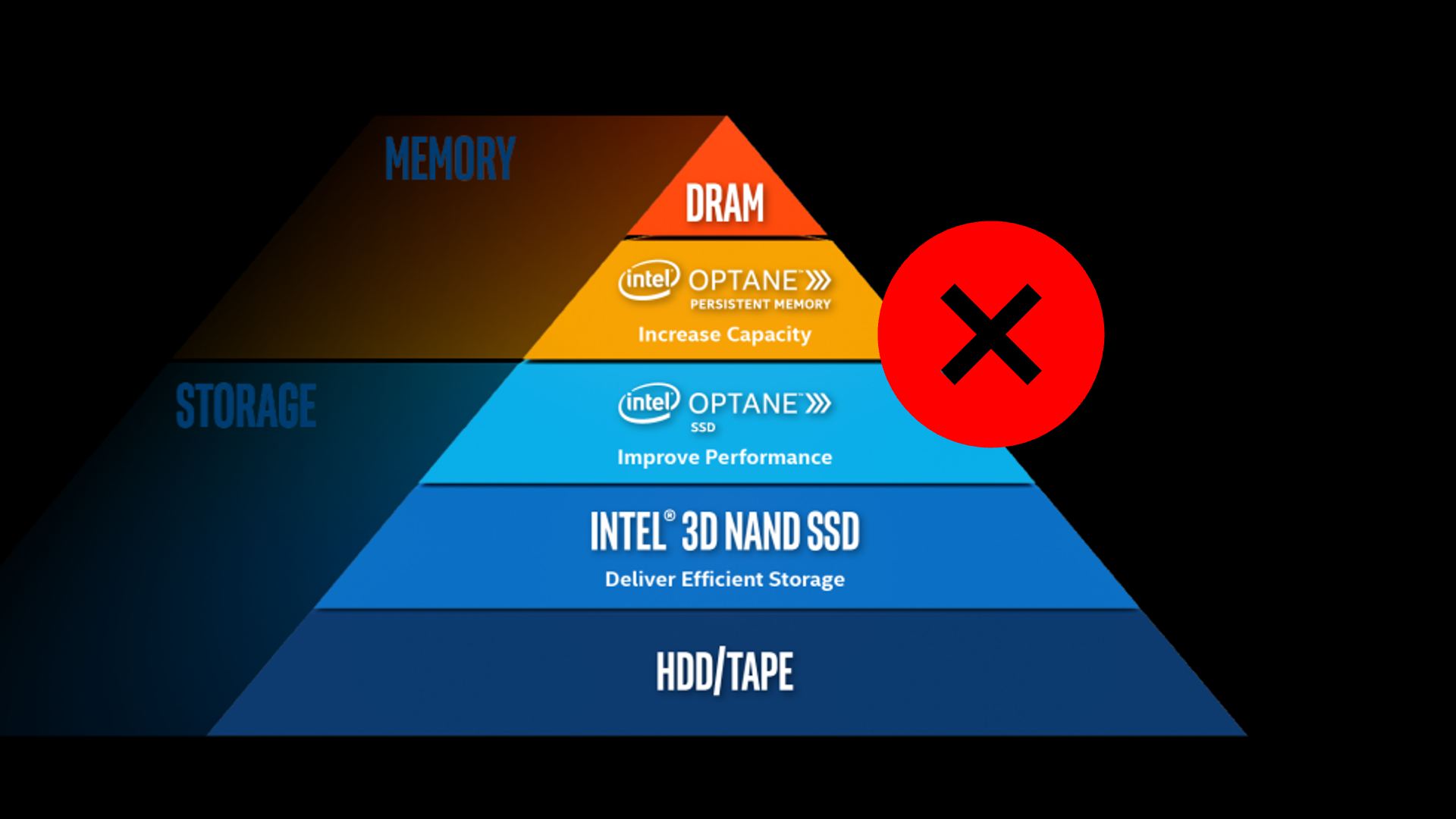

Optane

Intel Optane was an advanced memory and storage technology designed to bridge the gap between traditional DRAM and NAND-based storage by promising a unique combination of high speed, low latency, and persistence. The idea was for Optane memory modules to be used alongside traditional DRAM to expand memory capacity and improve system performance without the high cost of large DRAM deployments. However, despite its promising premise, Optane required too many changes to applications and environments, reduced the capacity and bandwidth available to DRAM, and depended on a new semiconductor technology that was expensive to develop and advance. Intel announced the discontinuation of Optane products in 2022 [2].

CXL

When it first came out in 2019, Compute Express Link (CXL) interconnect technology promised low-latency, cache-coherent access to remote memory. This meant that data centers could leverage “CXL memory pooling devices” that allowed compute resources to seamlessly access shared banks of memory, allocated as needed—reducing the amount of expensive DRAM needed per system. This would improve the economics of running next-gen applications like AI, HPC, and big data analytics.

As it turned out, the promises of CXL didn’t quite match the reality. In 2023, a team of Google researchers (Philip Levis, Kun Lin, and Amy Tai) published a paper titled “A Case Against CXL Memory Pooling” that laid out the challenges of real-world CXL implementations. When the paper first came out, it was controversial. Today, its arguments have proven true.

The paper made three key points. First, CXL memory pooling doesn’t actually yield better economics, because the price of the devices themselves plus the parallel networking infrastructure offsets whatever DRAM cost reduction can be achieved. Second, it forces application rewrites and adds software complexity, because retrieving a memory page involves conditionally copying remote (CXL) memory into local memory. Third, typical VMs are too small for CXL memory pooling to make sense [3].

A New Path Forward

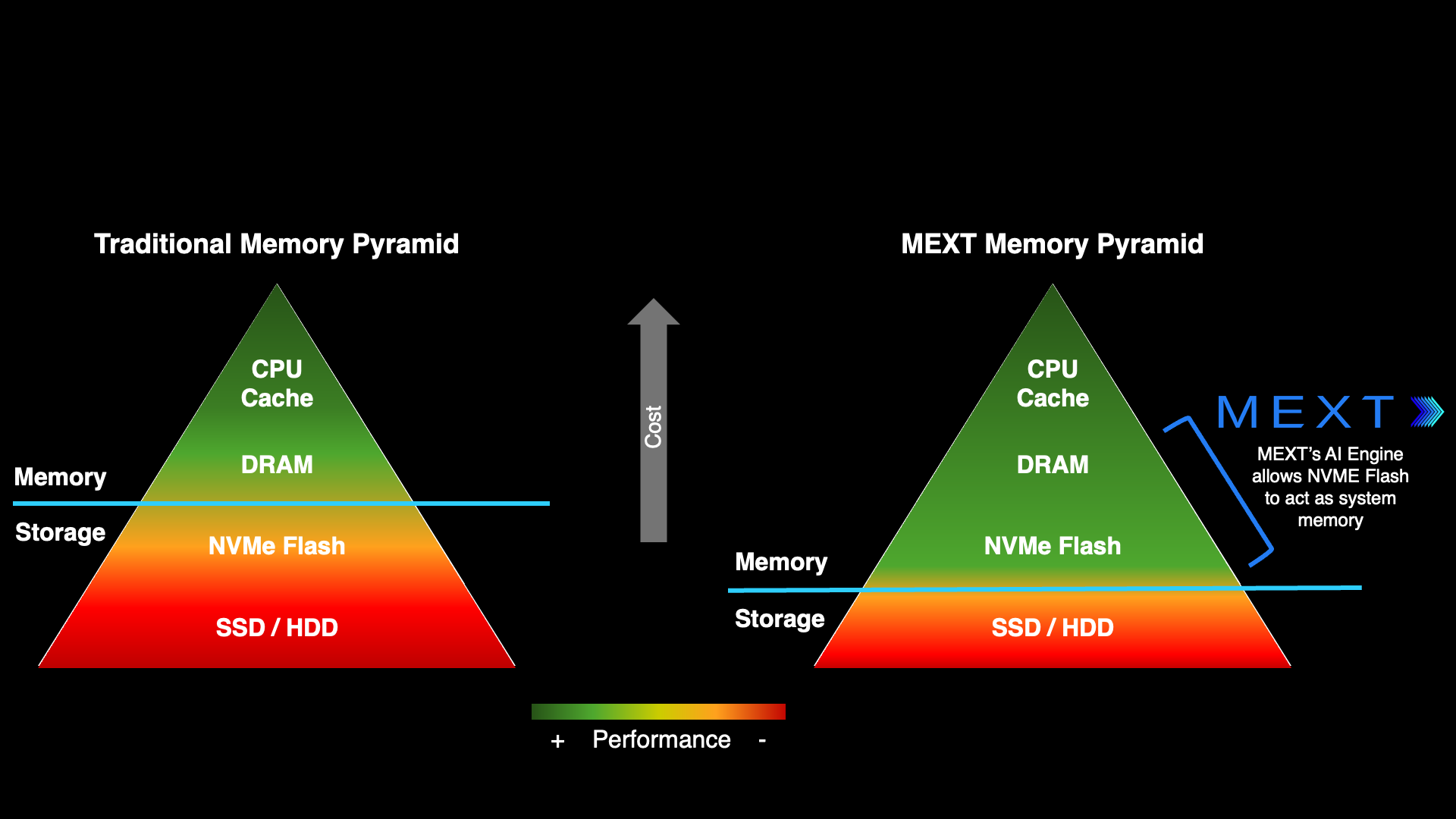

What if there were a solution that could address the top memory challenges while being sufficiently low-latency (unlike swap), seamless to implement (unlike Optane), and actually more cost-efficient (unlike CXL memory pooling)? Enter MEXT.

MEXT is a software solution that 1) identifies hot (actively used) vs. cold (not currently used) memory pages within DRAM, 2) offloads cold pages from DRAM to Flash, a location with 20x lower cost, 3) leverages AI to continually predict which pages in Flash the application is likely to request, and 4) proactively pushes those pages back to DRAM before the application needs them. As a result, the application stays performant because, from its perspective, the relevant memory pages are already resident in DRAM. This lets customers run applications with a far smaller DRAM footprint.
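The four steps above can be illustrated with a toy model. This is only a sketch of the general hot/cold tiering idea, not MEXT's implementation: the `TieredMemory` class, the `cold_after` idle threshold, and the `predict_next` function are all hypothetical, and the predictor is a trivial "next sequential page" guess standing in for the AI-based prediction described above.

```python
from dataclasses import dataclass, field


@dataclass
class TieredMemory:
    """Toy two-tier model: DRAM (fast) and Flash (cheap). Hypothetical."""
    cold_after: int = 3                        # assumed idle threshold, in ticks
    clock: int = 0                             # one tick per access
    dram: dict = field(default_factory=dict)   # page id -> last access tick
    flash: set = field(default_factory=set)    # page ids offloaded to Flash
    misses: int = 0                            # accesses that had to wait on Flash

    def access(self, page: int) -> None:
        self.clock += 1
        if page in self.flash:                 # not resident: slow path
            self.misses += 1
            self.flash.discard(page)
        self.dram[page] = self.clock

    def offload_cold(self) -> None:
        """Steps 1 and 2: classify pages, move the cold ones to Flash."""
        for page, last_used in list(self.dram.items()):
            if self.clock - last_used >= self.cold_after:
                del self.dram[page]
                self.flash.add(page)

    def prefetch(self, predicted: list) -> None:
        """Steps 3 and 4: bring predicted pages back before they are needed."""
        for page in predicted:
            if page in self.flash:
                self.flash.discard(page)
                self.dram[page] = self.clock


def predict_next(page: int) -> list:
    # Placeholder predictor (assumption): guess the next sequential page.
    return [page + 1]


mem = TieredMemory()
for p in range(5):
    mem.access(p)                  # touch pages 0..4 once each
mem.offload_cold()                 # pages 0 and 1 have gone cold
mem.access(0)                      # cold page: counts as a miss
mem.prefetch(predict_next(0))      # page 1 is pulled back into DRAM early
mem.access(1)                      # already resident, so no miss
print(f"misses: {mem.misses}, pages still in Flash: {sorted(mem.flash)}")
```

In this run, only the first access to a cold page pays the Flash penalty; the second is served from DRAM because the prediction arrived first. How well this works in practice depends entirely on the quality of the predictor, which this sketch does not attempt to model.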

MEXT works across a wide range of popular applications (Spark, Redis, Neo4j, Weaviate, Memcached, and beyond), installs in less than 20 minutes with no application rewrites, and yields up to 40% lower operational costs—on-premises or in any cloud environment. Big memory challenges have finally met their match.
